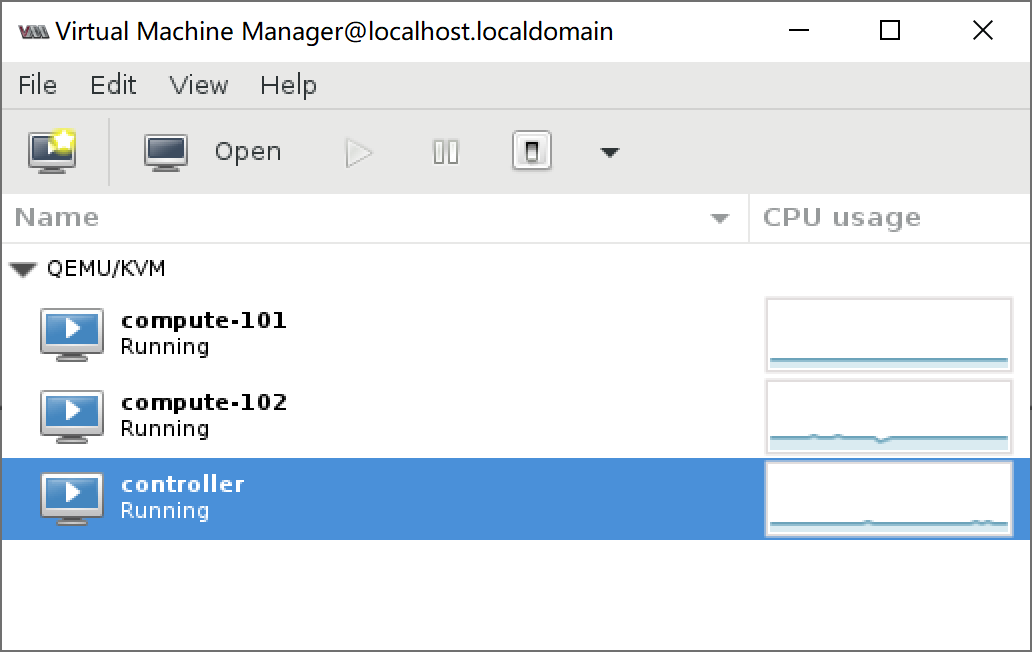

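Our company's business recently needed an OpenStack environment to learn on. Wallaby had already been released, but I deliberately chose the previous release, Victoria. There were not enough spare physical servers, so I took a single machine with 64 cores, 256 GB of RAM, and 6 TB of disk and built the environment on a few virtual machines running on it.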

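Lab environment

The lab uses three virtual machines, all running CentOS 8. One acts as the controller and network node, and the other two are compute nodes. Networking uses OVS + VLAN: eth0 carries the management network, eth1 of every VM is connected to the same OVS bridge on the host to emulate a trunk NIC, and the controller has an extra NIC, eth2, for access to the external network.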

| Node | Role | eth0 | eth1 | eth2 |
|---|---|---|---|---|
| controller | Controller node, network node | 172.16.10.100 | No IP | Bridged, no IP |
| compute-101 | Compute node | 172.16.10.101 | No IP | ❌ |
| compute-102 | Compute node | 172.16.10.102 | No IP | ❌ |

Installing the virtual machines

Install dependencies

Install KVM and the Linux bridge utilities

yum install -y qemu-kvm libvirt virt-install bridge-utils virt-manager dejavu-lgc-sans-fonts

dejavu-lgc-sans-fonts fixes garbled text in virt-manager.

Start libvirtd and enable it at boot:

systemctl enable libvirtd && systemctl start libvirtd

Install OVS

yum install openvswitch

Start OVS and enable it at boot

systemctl enable openvswitch && systemctl start openvswitch

Create the virtual machines

Use virt-manager to create the three virtual machines.

Configure networking

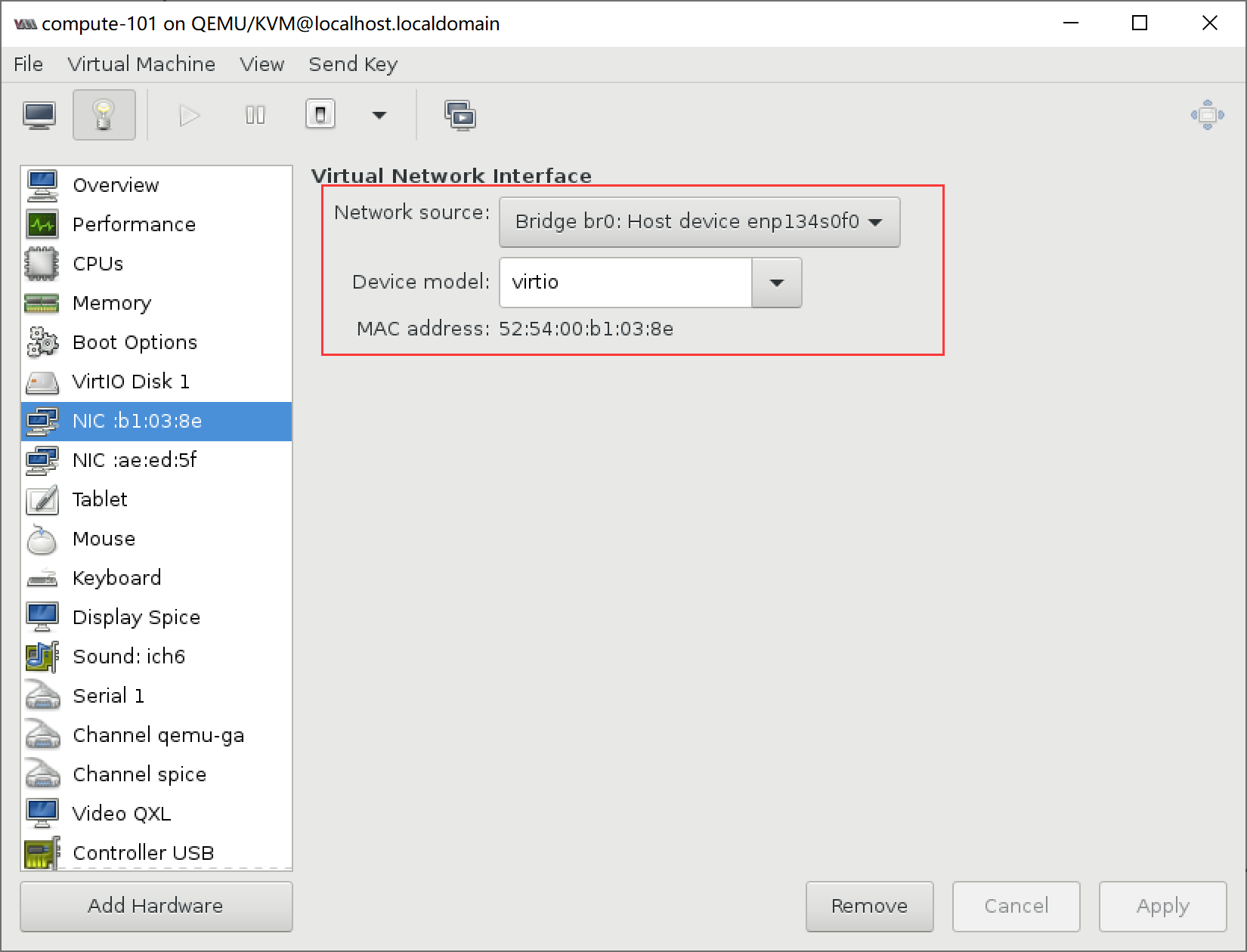

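Configure the management NIC

Give each VM a bridged network connection, as described in the article "Linux Virtualization with KVM". The result is shown below.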

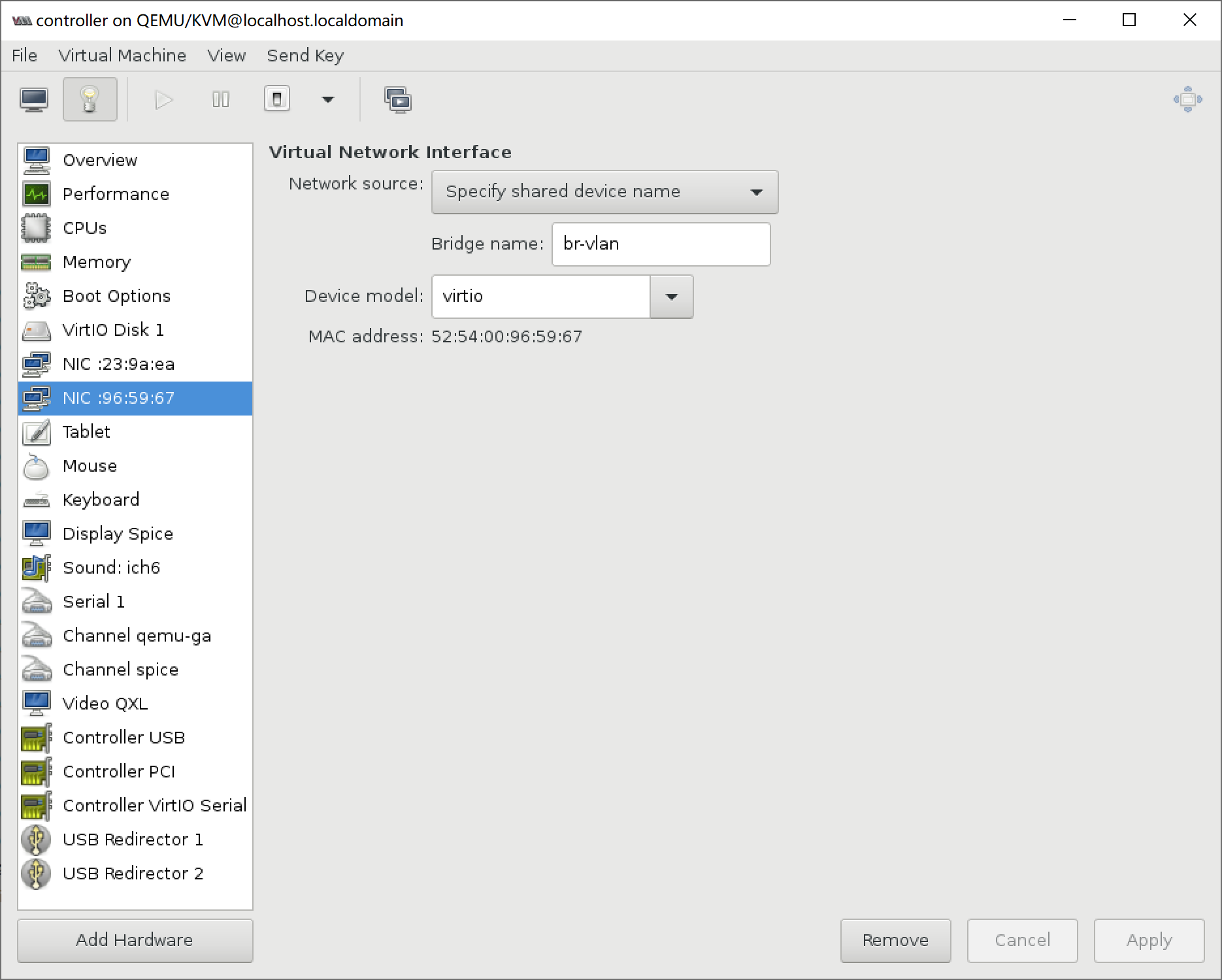

Configure the trunk NIC

Create a virtual bridge with OVS:

ovs-vsctl add-br br-vlan

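At this point br-vlan has no virtual NICs attached. Shut down the VM and add a network device in virt-manager, with br-vlan as its bridge source.

List the VMs to find the domain name, then edit its XML definition: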

virsh list --all

virsh edit controller

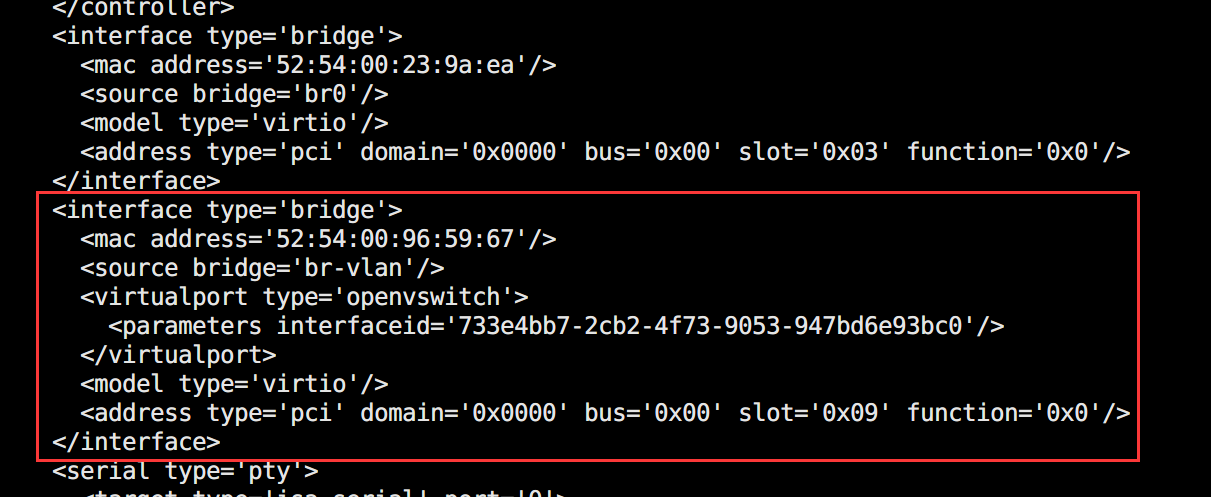

Find the corresponding interface element and add the following line between its source and model elements:

<virtualport type='openvswitch' />

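Save, exit, and start the VM. Once it has started, a new element appears inside virtualport (libvirt adds a parameters element carrying an interfaceid).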
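If you would rather not edit the XML interactively, the same change can be scripted. Below is a minimal POSIX-shell sketch. It assumes the domain XML was exported with virsh dumpxml and that libvirt writes the bridge source as <source bridge='br-vlan'/> with single quotes. add_ovs_virtualport is a hypothetical helper name, not a libvirt tool.

```shell
# Hypothetical helper (not part of libvirt): insert
# <virtualport type='openvswitch'/> right after the <source bridge='BRIDGE'/>
# line of a libvirt domain XML file.
# Usage: add_ovs_virtualport FILE BRIDGE
# Safe to re-run: nothing is added if a <virtualport> line already follows.
add_ovs_virtualport() {
    xml=$1
    bridge=$2
    awk -v src="<source bridge='$bridge'/>" \
        -v vp="<virtualport type='openvswitch'/>" '
        # The previous line was the matching source element: add the
        # virtualport line unless the current line already is one.
        pending && index($0, "<virtualport") == 0 { print "      " vp }
        { pending = 0; print }
        index($0, src) { pending = 1 }
    ' "$xml" > "$xml.tmp" && mv "$xml.tmp" "$xml"
}
```

With the VM shut off, it could be used as `virsh dumpxml controller > controller.xml`, then `add_ovs_virtualport controller.xml br-vlan`, then `virsh define controller.xml`.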

Confirm that the change took effect:

ovs-vsctl show

If it worked, the automatically created virtual NICs show up on the bridge:

    Bridge br-vlan
        Port br-vlan
            Interface br-vlan
                type: internal
        Port "vnet1"
            Interface "vnet1"
        Port "vnet2"
            Interface "vnet2"
        Port "vnet3"
            Interface "vnet3"
    ovs_version: "2.9.0"

Repeat the steps above for the other VMs.

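Installing OpenStack

Configure the environment

Perform these steps on all nodes.

Switch from NetworkManager to the legacy network-scripts service

# Install the network-scripts service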

yum install network-scripts -y

# Stop NetworkManager and disable it at boot

systemctl stop NetworkManager && systemctl disable NetworkManager

# Enable the network service at boot and start it

systemctl enable network && systemctl start network

Set a static IP address

Edit the NIC configuration file:

vim /etc/sysconfig/network-scripts/ifcfg-eth0

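Change or add the following lines, using each node's own IPADDR from the table above. GATEWAY must lie inside the subnet defined by IPADDR and NETMASK: as written, 172.16.0.1 is outside 172.16.10.0/24, so either use your gateway's address in 172.16.10.0/24 or widen NETMASK (for example to 255.255.0.0) to match your actual network.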

BOOTPROTO=static

ONBOOT=yes

IPADDR=172.16.10.100

NETMASK=255.255.255.0

GATEWAY=172.16.0.1

Restart the network:

systemctl restart network
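A GATEWAY outside the IPADDR/NETMASK subnet generally cannot be installed as the default route. The sketch below compares the two addresses after masking and could be run before restarting the network. It assumes IPv4 dotted-quad input and uses only POSIX shell arithmetic; ip_to_int and same_subnet are hypothetical helper names.

```shell
# Hypothetical helpers (not part of network-scripts).
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer
ip_to_int() {
    # Split the dotted quad on "." into $1..$4 (positional params of this function).
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet IPADDR GATEWAY NETMASK -> exit status 0 if both are on one subnet
same_subnet() {
    addr_i=$(ip_to_int "$1")
    gw_i=$(ip_to_int "$2")
    mask_i=$(ip_to_int "$3")
    # Compare the network parts of the two addresses.
    [ $(( addr_i & mask_i )) -eq $(( gw_i & mask_i )) ]
}
```

For example, `same_subnet 172.16.10.100 172.16.0.1 255.255.255.0` fails, while the same pair passes with `255.255.0.0`.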

Set the hostnames

# On the controller node

hostnamectl set-hostname controller

# On the compute node compute-101

hostnamectl set-hostname compute-101

# On the compute node compute-102

hostnamectl set-hostname compute-102

Edit the hosts file

Add the following lines to /etc/hosts on every node:

172.16.10.100 controller

172.16.10.101 compute-101

172.16.10.102 compute-102
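Appending with a plain echo to /etc/hosts duplicates entries when the step is repeated. Here is a small idempotent sketch, assuming the three lab hosts from the table. add_lab_hosts is a hypothetical name, and the file path is a parameter so it can be tried on a copy first.

```shell
# Hypothetical helper: append the lab's address/name pairs to a hosts file
# (default /etc/hosts), skipping any hostname that is already present, so the
# step can be repeated safely on every node.
add_lab_hosts() {
    hosts_file=${1:-/etc/hosts}
    while read -r addr name; do
        # -w: match the hostname as a whole word; -q: no output
        if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$addr" "$name" >> "$hosts_file"
        fi
    done <<EOF
172.16.10.100 controller
172.16.10.101 compute-101
172.16.10.102 compute-102
EOF
}
```

Run `add_lab_hosts` on each node; a second run adds nothing.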

Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

Install the base services

Perform these steps on all nodes.

Upgrade the packages

yum upgrade -y

Install the chrony time synchronization service

yum install chrony -y

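On the compute nodes, replace the line pool 2.centos.pool.ntp.org iburst in /etc/chrony.conf with server controller iburst so that they synchronize directly with the controller. chronyd does not serve NTP clients by default, so on the controller also add a line such as allow 172.16.10.0/24 to /etc/chrony.conf.

Restart chronyd and enable it at boot: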

systemctl restart chronyd && systemctl enable chronyd
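The chrony.conf change on the compute nodes can be made non-interactively with sed. This is a sketch assuming the default file has a single line starting with pool (as on CentOS 8); use_controller_ntp is a hypothetical helper.

```shell
# Hypothetical helper for the compute nodes: point chronyd at the controller
# by replacing every "pool ..." line in chrony.conf with
# "server controller iburst". The original file is kept as FILE.bak.
use_controller_ntp() {
    conf=${1:-/etc/chrony.conf}
    cp "$conf" "$conf.bak"
    # Write through the existing file so its owner, mode and SELinux label stay.
    sed 's/^pool .*/server controller iburst/' "$conf.bak" > "$conf"
}
```

After running it, restart chronyd as above and check with `chronyc sources` that controller is listed.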

Enable the PowerTools repository and install the OpenStack Victoria repository

yum config-manager --enable powertools

yum install centos-release-openstack-victoria -y

Install the OpenStack client and openstack-selinux

yum install python3-openstackclient openstack-selinux -y

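Set SELinux to permissive mode. The file below takes effect from the next boot; setenforce 0 applies the change to the running system.

cat>/etc/selinux/config<<EOF
SELINUX=permissive
SELINUXTYPE=targeted
EOF

setenforce 0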
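Writing /etc/selinux/config with cat replaces the whole file. If you prefer to change only the SELINUX= line and keep everything else, here is a sketch using a hypothetical set_conf_var helper (plain grep and sed, no extra tools assumed).

```shell
# Hypothetical helper: set KEY=VALUE in a shell-style config file, replacing
# an existing "KEY=" line or appending one. VALUE must not contain "|", "&"
# or "\" because it is used in a sed replacement.
set_conf_var() {
    file=$1 key=$2 value=$3
    if grep -q "^$key=" "$file"; then
        sed "s|^$key=.*|$key=$value|" "$file" > "$file.tmp"
        # Copy back instead of mv so the file keeps its inode, mode and label.
        cat "$file.tmp" > "$file" && rm -f "$file.tmp"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

For example, `set_conf_var /etc/selinux/config SELINUX permissive` followed by `setenforce 0`. Because the pattern is anchored on `KEY=`, SELINUXTYPE is left untouched.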

Grant the neutron and nova users passwordless sudo

echo "neutron ALL = (root) NOPASSWD: ALL" > /etc/sudoers.d/neutron

echo "nova ALL = (root) NOPASSWD: ALL" > /etc/sudoers.d/nova

Controller node

Install the database

Install MariaDB (MySQL works as well).

yum install mariadb mariadb-server python3-PyMySQL -y

Create and edit /etc/my.cnf.d/openstack.cnf and add the following:

[mysqld]
bind-address = 0.0.0.0
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8

Start the database and enable it at boot:

systemctl start mariadb && systemctl enable mariadb

Secure the database:

mysql_secure_installation
# Enter the current root password; press Enter if it is empty
Enter current password for root (enter for none):
OK, successfully used password, moving on...
# Set a root password?
Set root password? [Y/n] y
# Enter the new password
New password:
# Enter the new password again
Re-enter new password:
# Remove anonymous users?
Remove anonymous users? [Y/n] y
# Disallow remote root login?
Disallow root login remotely? [Y/n] n
# Remove the test database?
Remove test database and access to it? [Y/n] y
# Reload the privilege tables now?
Reload privilege tables now? [Y/n] y
# Answer according to your own environment; your choices need not match these

Install the message queue

Install the package:

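yum install rabbitmq-server -y

Start the message queue service and enable it at boot:

systemctl start rabbitmq-server && systemctl enable rabbitmq-server

Add the openstack user and set its password:

rabbitmqctl add_user openstack RABBIT_PASS

Give the openstack user configure, write, and read permissions:

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

To make monitoring easier, enable the web management plugin:

rabbitmq-plugins enable rabbitmq_management

Then open http://IP:15672 (the controller's address) in a browser. Note that by default RabbitMQ only lets the guest/guest account log in from localhost, so from another machine use a different account, for example the openstack user after running rabbitmqctl set_user_tags openstack administrator.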
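RABBIT_PASS later ends up inside URLs such as transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/. A real password containing characters like @, / or : should therefore be percent-encoded there (RFC 3986), while rabbitmqctl add_user takes it as-is. Below is a sketch of a hypothetical urlencode helper, assuming an ASCII password.

```shell
# Hypothetical helper: percent-encode a string for use in the password part
# of a transport_url. Unreserved characters (A-Z a-z 0-9 - . _ ~) are kept;
# everything else becomes %XX. Assumes ASCII input.
urlencode() {
    s=$1
    out=
    while [ -n "$s" ]; do
        rest=${s#?}          # the string without its first character
        c=${s%"$rest"}       # the first character
        case $c in
            [A-Za-z0-9._~-]) out=$out$c ;;
            # printf "'c" yields the character's numeric code
            *) out=$out$(printf '%%%02X' "'$c") ;;
        esac
        s=$rest
    done
    printf '%s\n' "$out"
}
```

For example, `urlencode 'p@ss/w:rd'` prints `p%40ss%2Fw%3Ard`, which would go into transport_url in place of the raw password.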

Install Memcached

Install the packages:

yum install memcached python3-memcached -y

Edit /etc/sysconfig/memcached and change the OPTIONS line to:

OPTIONS="-l 127.0.0.1,::1,controller"

Start Memcached and enable it at boot:

systemctl start memcached && systemctl enable memcached

Install the Keystone service

Create the database

Connect to the database:

mysql -u root -p

Create the keystone database:

CREATE DATABASE keystone;

Grant privileges on the keystone database, then exit:

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';
GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';
exit;
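Every service below repeats the same CREATE DATABASE / GRANT pattern. Here is a sketch of a hypothetical db_grant_sql helper that prints those statements for one database so they can be piped into the mysql client. It adds IF NOT EXISTS so that a re-run does not fail.

```shell
# Hypothetical helper: print the SQL that creates one service database and
# grants a user full access to it from localhost and from any host ("%").
# Usage: db_grant_sql DATABASE USER PASSWORD
db_grant_sql() {
    db=$1 user=$2 pass=$3
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}
```

For example, `db_grant_sql glance glance GLANCE_DBPASS | mysql -u root -p`, or for Nova's three databases: `for db in nova_api nova nova_cell0; do db_grant_sql "$db" nova NOVA_DBPASS; done | mysql -u root -p`.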

Install and configure Keystone

Install the packages:

yum install openstack-keystone httpd python3-mod_wsgi -y

Write the configuration file:

cat > /etc/keystone/keystone.conf <<EOF
[DEFAULT]
[database]
connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
[token]
provider = fernet
EOF

Populate the database:

su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories:

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service:

keystone-manage bootstrap --bootstrap-password ADMIN_PASS \
  --bootstrap-admin-url http://controller:5000/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

Configure the Apache HTTP server

Edit /etc/httpd/conf/httpd.conf and add:

ServerName controller

Create the symbolic link:

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Start httpd and enable it at boot:

systemctl start httpd && systemctl enable httpd

Create the environment variable script:

cat > admin-openrc <<EOF
export OS_USERNAME=admin
export OS_PASSWORD=ADMIN_PASS
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF

Load the variables with source admin-openrc (or . admin-openrc).
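If openstack commands fail with authentication or missing-URL errors, it helps to check whether the variables from admin-openrc are actually set in the current shell. Here is a sketch with a hypothetical check_openrc function; the variable list mirrors the script above.

```shell
# Hypothetical helper: report which admin-openrc variables are missing from
# the current environment; returns 1 if any of them is unset or empty.
check_openrc() {
    missing=0
    for var in OS_USERNAME OS_PASSWORD OS_PROJECT_NAME OS_USER_DOMAIN_NAME \
               OS_PROJECT_DOMAIN_NAME OS_AUTH_URL OS_IDENTITY_API_VERSION \
               OS_IMAGE_API_VERSION; do
        # eval reads the variable whose name is stored in $var.
        eval "val=\${$var:-}"
        if [ -z "$val" ]; then
            echo "missing: $var"
            missing=1
        fi
    done
    return $missing
}
```

Typical use: `. admin-openrc && check_openrc && openstack token issue`.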

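Create a domain, projects, users, and roles

Create a domain. A default domain already exists, so this command only shows how a domain is created and can be skipped:

openstack domain create --description "An Example Domain" example

Create the service project (projects are also called tenants):

openstack project create --domain default --description "Service Project" service

Verify that a token can be issued: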

openstack token issue

Install the Glance service

Create the database

Connect to the database:

mysql -u root -p

Create the glance database:

CREATE DATABASE glance;

Grant privileges on the glance database, then exit:

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';
GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';
exit;

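Create the glance user and assign its role

Create the glance user with the password GLANCE_PASS. Unlike the interactive --password-prompt form, the password is given directly on the command line:

openstack user create --domain default --password GLANCE_PASS glance

Add the admin role to the glance user in the service project:

# Assign the admin role to the glance user on the service project
openstack role add --project service --user glance admin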

Create the glance service and register its API

Create the glance service entity:

openstack service create --name glance --description "OpenStack Image" image

Register the API, i.e. create the Image service API endpoints:

openstack endpoint create --region RegionOne image public http://controller:9292
openstack endpoint create --region RegionOne image internal http://controller:9292
openstack endpoint create --region RegionOne image admin http://controller:9292
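The public, internal, and admin endpoints differ only in the interface name, and the same pattern repeats for Placement, Nova, and Neutron. Here is a sketch of a hypothetical endpoint_cmds helper that prints the three commands so they can be reviewed before being run.

```shell
# Hypothetical helper: print the three "openstack endpoint create" commands
# (public, internal, admin) for one service type and URL.
# Usage: endpoint_cmds SERVICE_TYPE URL [REGION]
endpoint_cmds() {
    svc_type=$1 url=$2 region=${3:-RegionOne}
    for iface in public internal admin; do
        echo "openstack endpoint create --region $region $svc_type $iface $url"
    done
}
```

After `. admin-openrc`, for example: `endpoint_cmds placement http://controller:8778 | sh` or `endpoint_cmds compute http://controller:8774/v2.1 | sh`.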

Install and configure Glance

Install the package:

dnf install openstack-glance -y

Write the configuration file:

cat > /etc/glance/glance-api.conf<<EOF
[database]
connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance
[keystone_authtoken]
www_authenticate_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = glance
password = GLANCE_PASS
[paste_deploy]
flavor = keystone
[glance_store]
stores = file,http
default_store = file
filesystem_store_datadir = /var/lib/glance/images/
EOF

Populate the database:

su -s /bin/sh -c "glance-manage db_sync" glance

Start the glance service and enable it at boot:

systemctl start openstack-glance-api && systemctl enable openstack-glance-api
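Since the configuration files are written with heredocs, it can be worth reading a value back before starting a service. Below is a minimal awk-based sketch; ini_get is a hypothetical name. It returns the first match and does not handle continuation lines. If crudini is installed, `crudini --get FILE SECTION KEY` does the same job.

```shell
# Hypothetical helper: print the value of KEY in [SECTION] of an INI file.
# Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        # Section header: remember its name without the brackets.
        /^[ \t]*\[/ {
            cur = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", cur)
            next
        }
        cur == section {
            line = $0
            sub(/^[ \t]*/, "", line)
            # The line must start with KEY, then optional blanks and "=".
            if (index(line, key) == 1) {
                rest = substr(line, length(key) + 1)
                if (rest ~ /^[ \t]*=/) {
                    sub(/^[ \t]*=[ \t]*/, "", rest)
                    print rest
                    exit
                }
            }
        }
    ' "$1"
}
```

For example, `ini_get /etc/glance/glance-api.conf glance_store default_store` should print `file`.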

Install the Placement service

Create the database

Connect to the database:

mysql -u root -p

Create the placement database:

CREATE DATABASE placement;

Grant privileges on the placement database, then exit:

GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'PLACEMENT_DBPASS';
GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'PLACEMENT_DBPASS';
exit;

Configure the user and endpoints

Create the placement user with the password PLACEMENT_PASS:

openstack user create --domain default --password PLACEMENT_PASS placement

Add the admin role to the placement user in the service project:

# Assign the admin role to the placement user on the service project
openstack role add --project service --user placement admin

Create the Placement service and register its API

Create the Placement service entity:

openstack service create --name placement --description "Placement API" placement

Create the Placement API endpoints:

openstack endpoint create --region RegionOne placement public http://controller:8778
openstack endpoint create --region RegionOne placement internal http://controller:8778
openstack endpoint create --region RegionOne placement admin http://controller:8778

Install and configure Placement

Install the Placement package:

yum install openstack-placement-api -y

Write the configuration file:

cat > /etc/placement/placement.conf <<EOF
[placement_database]
connection = mysql+pymysql://placement:PLACEMENT_DBPASS@controller/placement
[api]
auth_strategy = keystone
[keystone_authtoken]
auth_url = http://controller:5000/v3
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = placement
password = PLACEMENT_PASS
EOF

Populate the database:

su -s /bin/sh -c "placement-manage db sync" placement

Restart httpd:

systemctl restart httpd

Check the status of the Placement service:

placement-status upgrade check

Install the Nova service

Create the databases

Connect to the database:

mysql -u root -p

Create the nova_api, nova, and nova_cell0 databases:

CREATE DATABASE nova_api;
CREATE DATABASE nova;
CREATE DATABASE nova_cell0;

Grant privileges on each of the three databases, then exit:

# nova_api database
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
# nova database
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
# nova_cell0 database
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';
GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';
exit;

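Configure the user and endpoints

Create the nova user with the password NOVA_PASS:

openstack user create --domain default --password NOVA_PASS nova

Add the admin role to the nova user in the service project:

# Assign the admin role to the nova user on the service project
openstack role add --project service --user nova admin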

Create the Nova service and register its API

Create the Nova service entity:

openstack service create --name nova --description "OpenStack Compute" compute

Create the Compute API endpoints:

openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1
openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1
openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1

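Install and configure Nova

Install the Nova packages:

yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

Write the configuration file. The quoted 'EOF' delimiter keeps the shell from expanding $my_ip to an empty string, so nova resolves it itself. The [neutron] section lets nova-api call the Networking service and accept metadata requests forwarded by the Neutron metadata agent. The neutron user is created in the Neutron section, and METADATA_SECRET must match metadata_proxy_shared_secret in metadata_agent.ini.

cat > /etc/nova/nova.conf <<'EOF'
[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:RABBIT_PASS@controller:5672/
my_ip = 172.16.10.100
[api_database]
connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api
[database]
connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova
[api]
auth_strategy = keystone
[keystone_authtoken]
www_authenticate_uri = http://controller:5000/
auth_url = http://controller:5000/
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = nova
password = NOVA_PASS
[vnc]
enabled = true
server_listen = $my_ip
server_proxyclient_address = $my_ip
[glance]
api_servers = http://controller:9292
[oslo_concurrency]
lock_path = /var/lib/nova/tmp
[placement]
region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:5000/v3
username = placement
password = PLACEMENT_PASS
[neutron]
auth_url = http://controller:5000
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS
service_metadata_proxy = true
metadata_proxy_shared_secret = METADATA_SECRET
EOF

Populate the databases: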

# Populate the nova_api database
su -s /bin/sh -c "nova-manage api_db sync" nova
# Register the cell0 database
su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
# Create the cell1 cell
su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
# Populate the nova database
su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered:

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

Start the services and enable them at boot:

systemctl enable openstack-nova-api openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy
systemctl start openstack-nova-api openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

Check that the services started successfully (openstack compute service list shows the same information):

nova service-list
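For a scripted check instead of reading the table by eye, openstack compute service list can print plain values. The sketch below assumes input in the form produced by `openstack compute service list -f value -c Binary -c Host -c State` (one "binary host state" line per service). report_down_services is a hypothetical helper.

```shell
# Hypothetical helper: read "Binary Host State" lines on stdin and report
# every service whose state is not "up"; exit status 1 if any were found.
report_down_services() {
    awk '
        $3 != "up" { print "down: " $1 " on " $2; bad = 1 }
        END { exit bad }
    '
}
```

Usage: `openstack compute service list -f value -c Binary -c Host -c State | report_down_services`.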

Install the Neutron service

Create the database

Connect to the database:

mysql -u root -p

Create the neutron database:

CREATE DATABASE neutron;

Grant privileges on the database, then exit:

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';
GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';
exit;

Configure the user and endpoints

Create the neutron user with the password NEUTRON_PASS:

openstack user create --domain default --password NEUTRON_PASS neutron

Add the admin role to the neutron user in the service project:

# Assign the admin role to the neutron user on the service project
openstack role add --project service --user neutron admin

Create the Neutron service and register its API

Create the Neutron service entity:

openstack service create --name neutron --description "OpenStack Networking" network

Create the Networking API endpoints:

openstack endpoint create --region RegionOne network public http://controller:9696
openstack endpoint create --region RegionOne network internal http://controller:9696
openstack endpoint create --region RegionOne network admin http://controller:9696

Install and configure the Neutron service

Install the Neutron packages:

yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch python-neutronclient openstack-neutron-fwaas -y

Write the configuration file:

cat > /etc/neutron/neutron.conf <<EOF
[database]
connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
[DEFAULT]
core_plugin = ml2
service_plugins =
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
notify_nova_on_port_status_changes = true
notify_nova_on_port_data_changes = true
[keystone_authtoken]
www_authenticate_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[nova]
auth_url = http://controller:5000
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = nova
password = NOVA_PASS
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp
EOF

Note that service_plugins is left empty, so the Neutron API will not offer routers; add router to it if you want the L3 agent configured below to handle routing.

Configure the ML2 plug-in:

cat > /etc/neutron/plugins/ml2/ml2_conf.ini <<EOF
[ml2]
type_drivers = flat,vlan
tenant_network_types = vlan
mechanism_drivers = openvswitch
debug=True
[ml2_type_flat]
[ml2_type_vlan]
network_vlan_ranges =physnet1:1000:1999,physnet2
[ml2_type_vxlan]
[securitygroup]
enable_security_group = True
enable_ipset = True
firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
[ovs]
tenant_network_type = vlan
bridge_mappings = physnet1:br-vlan,physnet2:br-ex
EOF

Configure the L3 agent:

cat> /etc/neutron/l3_agent.ini<<EOF
[DEFAULT]
interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver
router_delete_namespaces = True
external_network_bridge =
verbose = True
[fwaas]
driver=neutron_fwaas.services.firewall.drivers.linux.iptables_fwaas.IptablesFwaasDriver
enabled = True
[agent]
extensions = fwaas
[ovs]
EOF

Configure the DHCP agent:

cat>/etc/neutron/dhcp_agent.ini<<EOF
[DEFAULT]
debug=True
interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver
dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
enable_isolated_metadata = True
dnsmasq_config_file = /etc/neutron/dnsmasq-neutron.conf
EOF
# DHCP option 26 is the interface MTU: instances are told to use 1454
echo "dhcp-option-force=26,1454" >/etc/neutron/dnsmasq-neutron.conf

Configure the metadata agent:

cat> /etc/neutron/metadata_agent.ini <<EOF
[DEFAULT]
nova_metadata_host = controller
metadata_proxy_shared_secret = METADATA_SECRET
memcache_servers = controller:11211
EOF

Configure the Open vSwitch agent:

cat > /etc/neutron/plugins/ml2/openvswitch_agent.ini <<EOF
[DEFAULT]
[agent]
[ovs]
bridge_mappings = physnet1:br-vlan,physnet2:br-ex
[securitygroup]
firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
enable_security_group=true
[xenapi]
EOF

Configure the OVS bridges:

systemctl enable openvswitch.service --now
systemctl status openvswitch.service
# Bridge connecting the VMs (via eth1)
ovs-vsctl add-br br-vlan
ovs-vsctl add-port br-vlan eth1
# Bridge connecting to the external network (via eth2)
ovs-vsctl add-br br-ex
ovs-vsctl add-port br-ex eth2

Create the plug-in symbolic link:

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Start the related services:

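# Populate the Neutron database before starting neutron-server
su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
# neutron-server provides the Networking API on the controller
systemctl enable neutron-server neutron-openvswitch-agent neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent neutron-ovs-cleanup openvswitch
systemctl start neutron-server neutron-openvswitch-agent neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent openvswitch
systemctl status neutron-server neutron-openvswitch-agent neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent openvswitch

Verify: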

. admin-openrc
openstack network agent list

Create the provider network (external network)

--provider-physical-network names the physical network to use; it must match the configuration in /etc/neutron/plugin.ini on the network node.

. admin-openrc
# Add rules to the default security group
openstack security group rule create --proto icmp default
openstack security group rule create --proto tcp --dst-port 22 default
openstack security group rule create --proto tcp --dst-port 3389 default
openstack security group rule create --proto tcp --dst-port 80 default
openstack security group rule create --proto tcp --dst-port 443 default
openstack security group rule create --proto tcp --dst-port 123 default
openstack security group rule create --proto tcp --dst-port 53 default
openstack security group rule create --proto udp --dst-port 123 default
openstack security group rule create --proto udp --dst-port 53 default
openstack network create --share --external \
  --provider-physical-network physnet2 \
  --provider-network-type flat provider
# The subnet must match the real external network
openstack subnet create --network provider \
  --allocation-pool start=192.168.68.10,end=192.168.68.250 \
  --dns-nameserver 1.2.4.8 --gateway 192.168.68.1 \
  --subnet-range 192.168.68.0/24 provider
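The security group rules above all follow one pattern. Below is a sketch of a hypothetical sg_rule_cmds helper that prints the same nine commands from a compact proto[:port] list, which is easier to review and extend. The list simply mirrors the rules above.

```shell
# Hypothetical helper: print "openstack security group rule create" commands
# for a security group (default: "default") from a proto[:port] list.
sg_rule_cmds() {
    group=${1:-default}
    for rule in icmp tcp:22 tcp:3389 tcp:80 tcp:443 tcp:123 tcp:53 udp:123 udp:53; do
        proto=${rule%%:*}    # text before the first ":"
        port=${rule#*:}      # text after the first ":"
        if [ "$proto" = "$rule" ]; then
            # No port given (e.g. icmp).
            echo "openstack security group rule create --proto $proto $group"
        else
            echo "openstack security group rule create --proto $proto --dst-port $port $group"
        fi
    done
}
```

Usage: `. admin-openrc && sg_rule_cmds | sh`.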

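Compute nodes

Install the Nova component

Install the package:

yum install openstack-nova-compute -y

Write the configuration file.

Adjust my_ip to the node's own management address (172.16.10.101 or 172.16.10.102) and, under [vnc], set novncproxy_base_url to the controller's address.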

The heredoc delimiter is quoted ('EOF') so that the shell writes $my_ip literally for nova to resolve, instead of expanding it to an empty string:

cat > /etc/nova/nova.conf <<'EOF'
[DEFAULT]
enabled_apis = osapi_compute,metadata
transport_url = rabbit://openstack:RABBIT_PASS@controller
my_ip = 172.16.10.101
[api]
auth_strategy = keystone
[keystone_authtoken]
www_authenticate_uri = http://controller:5000/
auth_url = http://controller:5000/
memcached_servers = controller:11211
auth_type = password
project_domain_name = Default
user_domain_name = Default
project_name = service
username = nova
password = NOVA_PASS
[neutron]
auth_url = http://controller:5000
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS
service_metadata_proxy = true
metadata_proxy_shared_secret = METADATA_SECRET
[vnc]
enabled = true
server_listen = 0.0.0.0
server_proxyclient_address = $my_ip
novncproxy_base_url = http://172.16.10.100:6080/vnc_auto.html
[glance]
api_servers = http://controller:9292
[oslo_concurrency]
lock_path = /var/lib/nova/tmp
[placement]
region_name = RegionOne
project_domain_name = Default
project_name = service
auth_type = password
user_domain_name = Default
auth_url = http://controller:5000/v3
username = placement
password = PLACEMENT_PASS
[libvirt]
virt_type=qemu
EOF

Start the services:

systemctl enable libvirtd.service openstack-nova-compute.service --now
systemctl status libvirtd.service openstack-nova-compute.service

On the controller, verify the compute nodes:

. admin-openrc
su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova
openstack hypervisor list
openstack compute service list
openstack catalog list
nova-status upgrade check
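compute-102 needs the same nova.conf as compute-101 except for my_ip. Here is a sketch of a hypothetical nova_conf_for_node helper. It assumes the file uses exactly the form `my_ip = ADDRESS` as written above; references such as $my_ip elsewhere in the file are left alone.

```shell
# Hypothetical helper: copy a nova.conf for another compute node, changing
# only the "my_ip = ..." line.
# Usage: nova_conf_for_node SOURCE DESTINATION NEW_IP
nova_conf_for_node() {
    src=$1 dst=$2 ip=$3
    sed "s/^my_ip = .*/my_ip = $ip/" "$src" > "$dst"
}
```

For example, after copying compute-101's file to compute-102 as nova.conf.101: `nova_conf_for_node nova.conf.101 /etc/nova/nova.conf 172.16.10.102`, then start the services as above and rerun discover_hosts on the controller.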

Install the Neutron component

Install the packages:

yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

Write the configuration file:

cat > /etc/neutron/neutron.conf <<EOF
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
[keystone_authtoken]
www_authenticate_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp
EOF

Configure the OVS bridge:

systemctl enable openvswitch --now
systemctl status openvswitch
ovs-vsctl add-br br-vlan
ovs-vsctl add-port br-vlan eth1

Configure the Neutron OVS agent:

cat>/etc/neutron/plugins/ml2/openvswitch_agent.ini<<EOF
[DEFAULT]
[agent]
[ovs]
bridge_mappings = physnet1:br-vlan
[securitygroup]
firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
enable_security_group=True
[xenapi]
EOF

Start the services:

# Restart nova-compute
systemctl restart openstack-nova-compute
systemctl enable neutron-openvswitch-agent --now
systemctl status neutron-openvswitch-agent

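Access the dashboard

Open http://172.16.10.100/dashboard in a browser and log in as admin/ADMIN_PASS. This requires the dashboard (Horizon, package openstack-dashboard) to be installed and configured on the controller.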